I’m really impressed with Vaughn Tan’s writing on uncertainty. He points out that most organizations confuse unknowns with “risks”, which blinds us to the real nature of uncertainty.

Risk means we know the possible worst-case scenarios and how to plan for each. Everything is measurable. But treating uncertainty like risk stops us from seeing the problem clearly before we try to solve it. If uncertainty were truly risk, we would have been able to predict the LA fires of January 2025. Truth is, we didn’t know they would happen.

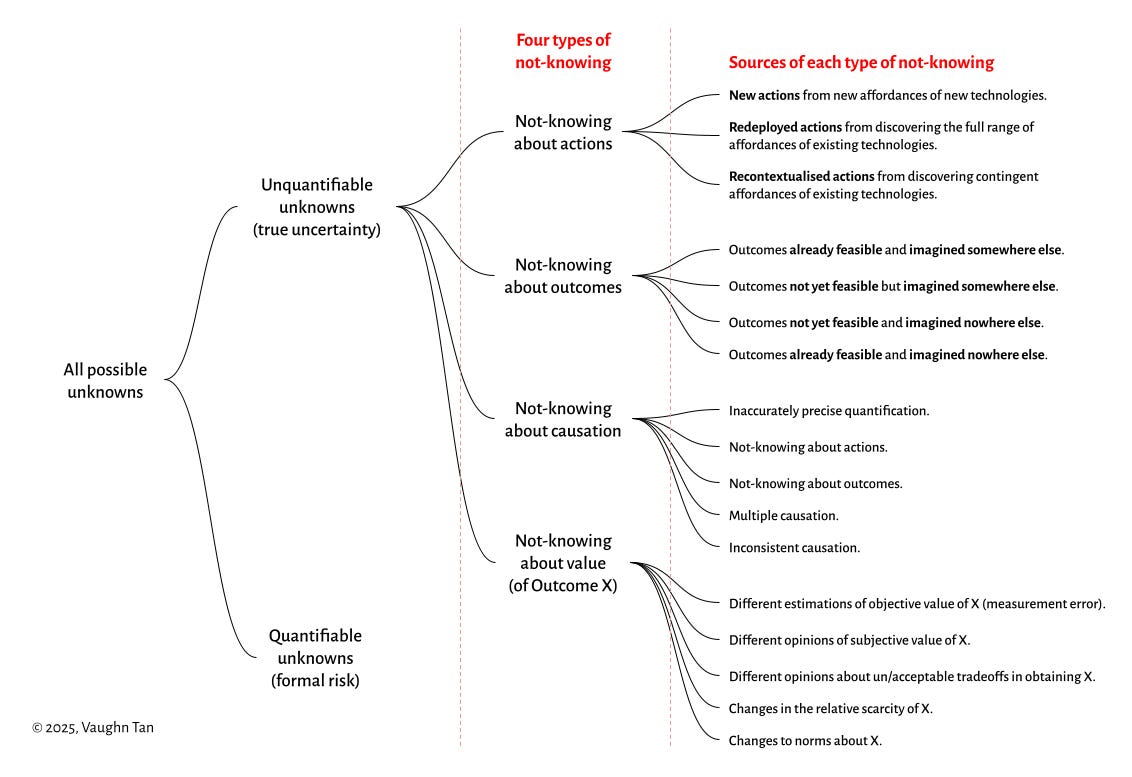

Vaughn defines four types of unknowns, or as he calls them, four types of non-risk non-knowings:

Not-knowing about actions: This arises from uncertainty about what actions or possibilities new and existing technologies offer. For example, when a company faces a new technology, it may be unclear what can actually be done with it or how to deploy it effectively. The broad approach here is to run many small, intentionally diverse experiments as a portfolio to explore the space of possible actions and discover new affordances.

Not-knowing about outcomes: This involves uncertainty about what outcomes can be achieved or are even imaginable. Outcomes may be feasible but not yet imagined, or imaginable but not yet feasible. For known outcomes, experimentation through portfolios works well. For novel or unprecedented outcomes, structured imaginative techniques such as speculative fiction or scenario planning help expand what’s considered possible.

Causal not-knowing: This concerns uncertainty about how actions lead to outcomes, including when multiple causes exist or causality is inconsistent. When causal relationships are resolvable, traditional hypothesis testing and structured experiments apply. When not resolvable, it may be more effective to try many varied small interventions and optimise for directional movement rather than precise control (“stacking the deck”).

Not-knowing about values: This type deals with uncertainty about how much an outcome is worth—subjective and often contested judgments that can change over time or differ across stakeholders. Approaches include reasoning, argumentation, negotiation, and setting broad, flexible goals that can accommodate diverse values and changing norms.

Why does this matter?

These four types help us recognize which unknown we’re dealing with, so we can design smarter experiments.

I’ve always approached org design and strategy as experiments: What level of risk will we take to learn X?

Vaughn’s thinking refines that to:

What risk will we take to learn about a specific action, outcome, causation, or value?

Experiments aren’t just throwing spaghetti at the wall. They’re about clarifying what we don’t know, what we want, and how to move toward it.

I’ve used this hypothesis statement from Lean Change Management with teams:

By [implementing this change]

We will see [this result/outcome]

As [measured by these metrics]

With Vaughn’s four types of unknowns, we can go deeper. Not just “will we see this result?” but “what specific unknown will we learn more about?”

Here’s a new hypothesis sketch:

By [implementing this change]

We expect to learn more about:

Action – what is actually possible here?

Outcome – what might be achievable or imaginable?

Causation – how (or if) this action will lead to that result?

Value – what is this result worth to us/others?

As measured by [these signals or indicators]

And we’ll know we’ve learned something when [this happens].

Tagging experiments by their type of unknown keeps us honest about what we don’t know—and stops us from treating a foggy unknown like a clear risk.

It also lets us layer learning: an experiment about action might reveal unexpected outcomes or shift our sense of value. Naming the type upfront sets us up to see pivots as part of the journey, not surprises to fix.
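If you track experiments in a spreadsheet or a small script, the tagging idea can be sketched in a few lines of Python. This is only my rough illustration of the hypothesis sketch above, not something from Vaughn or Lean Change Management. The names `Unknown`, `Hypothesis`, and `statement` are made up for this example, and the sample values are borrowed from the workshop pricing experiment further down.

```python
from dataclasses import dataclass
from enum import Enum


class Unknown(Enum):
    """The four types of unknown, each with the question it asks."""
    ACTION = "what is actually possible here?"
    OUTCOME = "what might be achievable or imaginable?"
    CAUSATION = "how (or if) this action will lead to that result?"
    VALUE = "what is this result worth to us/others?"


@dataclass
class Hypothesis:
    """One experiment, tagged with the unknown it is meant to explore."""
    change: str         # By [implementing this change]
    unknown: Unknown    # We expect to learn more about ...
    signals: list[str]  # As measured by [these signals or indicators]
    learned_when: str   # And we'll know we've learned something when ...

    def statement(self) -> str:
        """Render the hypothesis in the four-line template."""
        return "\n".join([
            f"By {self.change}",
            f"We expect to learn more about: "
            f"{self.unknown.name.title()} ({self.unknown.value})",
            f"As measured by {', '.join(self.signals)}",
            f"And we'll know we've learned something when {self.learned_when}.",
        ])


# Illustrative values only, taken from the pay-what-you-want example.
pricing = Hypothesis(
    change="running a pay-what-you-want experiment",
    unknown=Unknown.VALUE,
    signals=["payment distribution", "sign-ups", "feedback"],
    learned_when="the data shifts our pricing conversation",
)
print(pricing.statement())
```

Making `unknown` a required field is the point: you can’t write the experiment down without first deciding which kind of not-knowing it is about.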

Maybe this helps teams focus on what’s really going on rather than what we think is going on.

Example Experiments

Just to pressure-test this updated hypothesis statement, let’s ask GPT-5! Hey chat, give me four example experiments, one for each type of unknown, using this new hypothesis statement.

1. 4-day workweek pilot

By launching a 4-day workweek trial for one team over six weeks

We expect to learn about: Action — what operational changes and workflows actually work in a compressed schedule without sacrificing output.

Measured by: project delivery rates, employee feedback, and customer satisfaction.

We’ll know we’ve learned something when: we have a clear sense of which tasks and processes adapt well, what blockers arise, and where support or redesign is needed.

2. Cross-functional product team

By restructuring two teams into one team for a quarter

We expect to learn about: Causation — does tighter integration speed releases?

Measured by: time to launch, handoffs, clarity of roles in retrospectives.

We’ll know we’ve learned when: a clear pattern linking structure to speed or quality emerges.

3. Onboarding completion through progress bar tracking

By adding a progress-tracking bar to onboarding for three months

We expect to learn about: Outcome — whether more users complete onboarding and, as a result, retention increases.

Measured by: completion rate, session length, churn rate.

We’ll know we’ve learned something when: completion rate and churn rate shift measurably, in either direction.

4. Pay-what-you-want workshop pricing

By running a pay-what-you-want experiment

We expect to learn about: Value — what participants are willing to pay when given choice.

Measured by: payment distribution, sign-ups, feedback.

We’ll know we’ve learned when: the data shifts our pricing conversation—validating, challenging, or revealing new tiers.

Not bad, robot.

This is still early thinking!

Of course, the true test of this lies in the work. I’m excited to try this in my own work with clients.

In a world where “being able to navigate ambiguity” is seen as a core competency, being clear on what we don’t know and designing how to learn is a core practice.

Have you approached experiments in this way? How have you helped your teams navigate the unknown?

What else I’m reading

I’ve always thought about the role of luck in climbing, careers, and even organizations. Luck plays a huge role in the outcomes we want. Cate Hall says we should increase our surface area for luck.

Navigating by aliveness — “will this job, project, trip, or opportunity take me in the direction of aliveness or away from it?” A beautiful question.

Clay’s Five OD Things is so well-synthesized. You should subscribe.

The product design talent crisis. It’s a tough time to be a newer product designer right now. On why companies should invest in different ways to grow their junior designers.

And relatedly… Designers! Designers! Designers! “What companies want isn’t just a designer, but someone who can own outcomes end-to-end: set direction, make decisions without committee drag, and ship.” A good explainer by Carly on why the talent marketplace is flooded and yet there is still demand for senior design talent.